Unlocking Gemini's Video Generation: Prompts, Seeds, and Developer Insights for Google Workspace

Unlocking the Black Box: Understanding Gemini's Video Generation Inputs for Developers

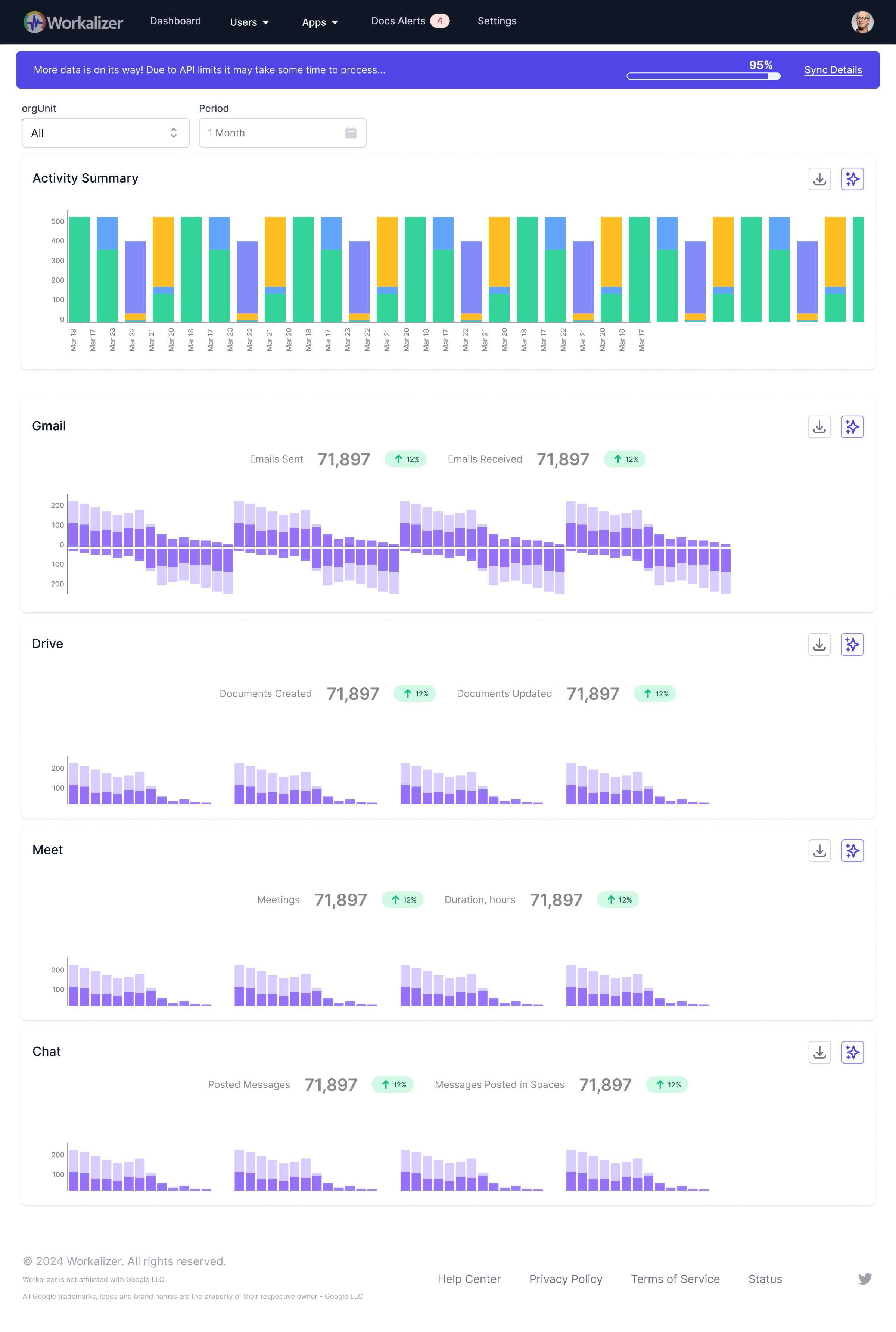

As Google Workspace continues to integrate advanced AI capabilities, tools like Gemini are transforming how we create content, from text to stunning visuals. For those diving into Gemini's video generation features, such as the Veo 3 model, a common curiosity arises: how can we understand the underlying inputs that drive these sophisticated creations? Just as administrators monitor the Google Workspace Status Dashboard for insights into service health and performance, developers and power users often seek similar transparency into their AI tools.

A recent thread on the Google support forum highlighted this very inquiry, with a user asking how to find the exact input data, specifically the 'seed' and 'prompt', that Gemini used to generate a video. This question touches upon a broader desire for control, reproducibility, and deeper understanding in AI-powered workflows, which is especially pertinent for those building integrations within Google Workspace.

Finding Your Prompt: The Visible Input

The good news is that locating the prompt you used to generate a video with Gemini is quite straightforward and immediately accessible. As one expert, Scorpions, explained in the forum:

- Scroll Up in Chat History: Your original prompt is always visible within your Gemini chat history. Simply navigate back through your conversation, and you'll find the exact text you provided.

This accessibility is crucial for iterating on ideas, refining your requests, and understanding how specific phrasing influences the AI's output. For content creators, this means easy experimentation and rapid prototyping. For developers, it ensures that the user-facing input is always auditable and can be referenced for debugging or feature expansion.

The Elusive Seed: A Behind-the-Scenes Process

While prompts are readily available, the 'seed' data presents a different story. The seed is an internal value, often a number, that initializes the random number generator used in AI models. It plays a critical role in the uniqueness and reproducibility of AI-generated content. If you use the same prompt and seed, the AI *should* ideally produce the same or a very similar output. However, for most Gemini users, this information remains a 'black box'.
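To make the idea of a seed concrete, here is a minimal sketch using Python's standard `random` module. It has nothing to do with Gemini's internals, which are not public; it only illustrates the general principle that a generator initialized with the same seed produces the same sequence of values. The function name `sample_noise` is made up for this illustration.

```python
import random


def sample_noise(seed: int, n: int = 4) -> list[float]:
    """Draw n pseudo-random values from a generator initialised with `seed`."""
    # A dedicated generator instance, so the global random state is untouched.
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


first = sample_noise(1234)
second = sample_noise(1234)
other = sample_noise(1235)

# Same seed: the two sequences are identical.
print("same seed matches:", first == second)
# Different seed: the sequences diverge.
print("different seed matches:", first == other)
```

A generative model draws its starting noise in a comparable way, which is why a fixed seed combined with an identical prompt tends to yield the same or a very similar result.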

According to the support thread, the seed is not visible in the standard Gemini application for privacy and technical reasons. It's handled automatically behind the scenes, ensuring a diverse range of outputs while abstracting away complex technical details from the average user. This design choice prioritizes ease of use and creative exploration over granular control for the general audience.

Developer Access: Unlocking the Seed via API

Here's where the distinction for developers and integrators becomes critical. As Scorpions noted, if you're a developer using the Gemini API, you *can* find the seed in the raw JSON metadata returned by the API. This level of access is paramount for advanced use cases:

- Reproducibility in Development: For developers building applications that leverage Gemini for video generation, knowing the seed allows for exact replication of results. This is invaluable for testing, debugging, and ensuring consistent outputs in production environments.

- Fine-Tuning and Iteration: With seed access, developers can systematically experiment with minor prompt variations while keeping the underlying random generation consistent, allowing for more precise control over the iterative design process.

- Custom Workflows: Integrating Gemini into larger systems often requires detailed logging and control. Access to the seed enables developers to log every parameter that went into a specific video generation, enhancing auditability and system understanding.
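The logging idea in the last point could be sketched roughly as follows. The forum thread does not say where the seed sits in the API's JSON, so this example assumes nothing about the schema: it searches the parsed response for any key named `seed`. The payload shown, the field names, and the helpers `find_key` and `build_audit_record` are all hypothetical and exist only for illustration; check the actual response of the API you call before relying on any of them.

```python
import json
from datetime import datetime, timezone
from typing import Any, Optional


def find_key(node: Any, key: str) -> Optional[Any]:
    """Depth-first search for the first value stored under `key` in parsed JSON."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def build_audit_record(prompt: str, raw_response: str) -> dict:
    """Pair the prompt with whatever seed the response metadata exposes."""
    payload = json.loads(raw_response)
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "seed": find_key(payload, "seed"),  # None when no seed is present
    }


# HYPOTHETICAL payload: the real response schema may look entirely different.
example_response = (
    '{"metadata": {"generation": {"seed": 1234}}, '
    '"videos": [{"uri": "example.mp4"}]}'
)

record = build_audit_record(
    "A paper boat drifting down a rainy street", example_response
)
print(json.dumps(record, indent=2))
```

Storing a record like this next to each generated video keeps the prompt and seed together, so a result can be traced back to its inputs later.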

For users who aren't developers but want to recreate a specific look or style, the advice from the forum is to ask Gemini for the "expanded prompt" it used to build that specific scene. This provides a more detailed textual description that can serve as a proxy for the 'seed' in guiding future generations, albeit without the exact numerical control.

Why Understanding AI Inputs Matters for Development and Integrations

For the workalizer.com audience, particularly those focused on "development-integrations," the transparency (or lack thereof) regarding AI inputs like prompts and seeds is more than just a curiosity: it is a foundational aspect of building robust and reliable solutions. When integrating Gemini into custom applications or Google Workspace workflows, understanding these inputs directly impacts:

- System Reliability and Predictability: Developers need to anticipate how their integrated AI components will behave. Knowing the inputs, especially the seed, helps in predicting and controlling the output, which is vital for mission-critical applications.

- Debugging and Error Resolution: When an AI generates an unexpected or undesirable output, the first step in debugging is to examine the inputs. Without access to the seed, diagnosing issues in a black-box system can be significantly more challenging. This is akin to troubleshooting a Google Workspace incident without access to the alert center: you are flying blind.

- Ethical AI and Compliance: In many industries, there's a growing demand for transparency in AI systems. Being able to trace the inputs that led to a specific output can be crucial for regulatory compliance, auditing, and ensuring ethical AI practices. Developers can build logging mechanisms around API-provided seeds to demonstrate accountability.

- Innovation and Customization: The ability to programmatically control and log seeds opens up new avenues for innovation. Developers can create sophisticated tools that manage AI generation parameters, build A/B testing frameworks for prompts, or even design systems that learn and adapt seed usage for optimal results.
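As a rough illustration of the A/B testing idea, the sketch below plans a grid of prompt variants against a fixed set of seeds, so that differences between outputs can be attributed to the wording rather than to randomness. It only builds the plan and does not call any API. The `GenerationJob` class and `plan_jobs` function are hypothetical names, and whether a given endpoint accepts a caller-supplied seed is an assumption you would need to verify.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class GenerationJob:
    """One planned generation: a prompt variant paired with a fixed seed."""
    prompt: str
    seed: int


def plan_jobs(prompts: list[str], seeds: list[int]) -> list[GenerationJob]:
    """Pair every prompt variant with every seed (prompts vary slowest)."""
    return [GenerationJob(prompt, seed) for prompt, seed in product(prompts, seeds)]


prompt_variants = [
    "A paper boat drifting down a rainy street, wide shot",
    "A paper boat drifting down a rainy street, close-up",
]
fixed_seeds = [11, 22]

jobs = plan_jobs(prompt_variants, fixed_seeds)
for job in jobs:
    print(job)
```

Comparing the outputs of jobs that share a seed isolates the effect of the prompt change, while comparing jobs that share a prompt shows how much variation the seed alone introduces.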

As Google Workspace continues to evolve, the integration of powerful AI models like Gemini will only deepen. For developers, the ability to peer into the mechanics of these models, even just a little, through API access to data like the 'seed', is invaluable. It transforms a powerful tool into a controllable, predictable, and integratable component of a larger digital ecosystem.

Conclusion

The journey to understand Gemini's video generation inputs reveals a clear distinction: while the 'prompt' is a user-friendly, visible input for everyone, the 'seed' remains largely a developer-centric detail accessible primarily through the API. This design balances ease of use for the general public with the granular control and transparency required by developers and integrators. For those building solutions within Google Workspace, leveraging this API access to understand and manage AI inputs will be key to unlocking the full potential of Gemini and creating truly innovative and reliable integrations. As AI becomes more embedded in our daily workflows, the demand for such insights will only grow, pushing for greater transparency and control in the tools we use.